Using the APISIX clickhouse-logger plugin

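I have recently been working with APISIX on Kubernetes. APISIX and apisix-ingress write their logs to standard output, and I wanted to persist them. Our company already runs ClickHouse and Grafana, so I decided to store the logs in ClickHouse and visualize them in Grafana. I ran into quite a few problems along the way, so below I walk through the setup step by step.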

This walkthrough follows the official APISIX documentation.

Creating the table for clickhouse-logger

The documentation does not explain how the ClickHouse table should be created. It only gives a very simple table structure.


Frankly, that table is useless: it stores none of the key information. With no other option, I went to read the source code.

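The send path can be pictured roughly as follows. This is a minimal Python sketch, not the plugin's Lua code: it assumes the plugin posts an `INSERT ... FORMAT JSONEachRow` statement over ClickHouse's HTTP interface and authenticates with the standard `X-ClickHouse-*` headers. The function name `build_clickhouse_insert` and the sample `conf` values are hypothetical.

```python
import json


def build_clickhouse_insert(conf, entries):
    """Build the HTTP body and headers for a JSONEachRow insert (illustrative)."""
    # JSONEachRow expects one JSON object per row, separated by newlines.
    rows = "\n".join(json.dumps(e, separators=(",", ":")) for e in entries)
    body = f"INSERT INTO {conf['logtable']} FORMAT JSONEachRow {rows}"
    headers = {
        "Content-Type": "application/json",
        # Standard ClickHouse HTTP-interface headers for credentials and database.
        "X-ClickHouse-User": conf["user"],
        "X-ClickHouse-Key": conf["password"],
        "X-ClickHouse-Database": conf["database"],
    }
    return body, headers


# Hypothetical configuration values, for illustration only.
conf = {"user": "default", "password": "changeme",
        "database": "default", "logtable": "apisix_log"}
body, headers = build_clickhouse_insert(conf, [{"route_id": "1", "latency": 3.5}])
print(body)
```

The point to take away is that every key in the log entry has to line up with a column of the target table, which is why the shape of the entry matters in the steps that follow.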

As you can see, what gets sent to ClickHouse is log_message. The next step is to find out where log_message comes from.


log_message is obtained from log_util.get_log_entry(plugin_name, conf, ctx), so let's look at the get_log_entry function.


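This function contains a branch: if log_format is specified in the plugin metadata and also in conf, the custom log_format is used; otherwise the default fields are used. The default fields are produced by the get_full_log function.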

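The branching described above can be sketched in Python as follows. This is an illustration of the logic as the text describes it, not a port of the APISIX Lua source: the exact condition may differ between APISIX versions, the default field layout here is abbreviated and assumed, and the flat `ctx` dictionary and its keys are hypothetical. The `$name` convention for referencing variables in a custom format mirrors how APISIX log formats are usually written.

```python
def get_full_log(ctx):
    """Default entry; this field layout is an assumption, not copied from APISIX."""
    return {
        "request": {"uri": ctx["uri"], "method": ctx["method"], "headers": ctx["headers"]},
        "response": {"status": ctx["status"]},
        "route_id": ctx["route_id"],
        "client_ip": ctx["remote_addr"],
        "start_time": ctx["start_time_ms"],
        "latency": ctx["latency_ms"],
    }


def get_custom_format_log(ctx, log_format):
    """Resolve "$name" values against ctx; keep any other value as a literal."""
    entry = {}
    for key, value in log_format.items():
        if isinstance(value, str) and value.startswith("$"):
            entry[key] = ctx.get(value[1:])
        else:
            entry[key] = value
    return entry


def get_log_entry(metadata, conf, ctx):
    # Condition follows the description in the text: both must define log_format.
    # Using conf's format in that case is an assumption of this sketch.
    if metadata.get("log_format") and conf.get("log_format"):
        return get_custom_format_log(ctx, conf["log_format"])
    return get_full_log(ctx)


# Hypothetical request context, for illustration only.
ctx = {"uri": "/get", "method": "GET", "headers": {"host": "example.com"},
       "status": 200, "route_id": "1", "remote_addr": "10.0.0.8",
       "host": "example.com", "start_time_ms": 1673519253123, "latency_ms": 3.5}
fmt = {"host": "$host", "client_ip": "$remote_addr", "env": "prod"}

default_entry = get_log_entry({}, {}, ctx)
custom_entry = get_log_entry({"log_format": fmt}, {"log_format": fmt}, ctx)
print(sorted(default_entry))
print(custom_entry)
```

Whichever branch runs decides the set of keys in the entry, and therefore the set of columns the ClickHouse table needs.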

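The default log consists of the fields above, which is basically enough to determine the structure of the ClickHouse table. To double-check, I first enabled file-logger and inspected the structure of the entries it wrote.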


With that, we can create the corresponding table.

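As one possible starting point, the Python sketch below generates a `CREATE TABLE` statement from a column map. It is not the schema used in this article: the table name `apisix_log`, the column list, and the choice to keep the nested `request`, `response`, and `server` objects as JSON strings are all assumptions made for illustration.

```python
# Assumed column map: scalar fields get native types, nested objects stay as JSON text.
COLUMNS = {
    "start_time": "Float64",   # request start, milliseconds since epoch
    "latency": "Float64",      # total latency in milliseconds
    "client_ip": "String",
    "route_id": "String",
    "service_id": "String",
    "upstream": "String",
    "request": "String",       # nested object kept as raw JSON
    "response": "String",      # nested object kept as raw JSON
    "server": "String",        # nested object kept as raw JSON
}


def create_table_sql(database, table, columns):
    """Render a MergeTree CREATE TABLE statement from a name -> type mapping."""
    cols = ",\n".join(f"    `{name}` {ctype}" for name, ctype in columns.items())
    return (
        f"CREATE TABLE IF NOT EXISTS {database}.{table} (\n"
        f"{cols}\n"
        f") ENGINE = MergeTree()\n"
        f"ORDER BY start_time"
    )


ddl = create_table_sql("default", "apisix_log", COLUMNS)
print(ddl)
```

Whether ClickHouse accepts nested JSON objects into `String` columns, and whether it ignores entry fields that have no matching column, depends on the server version and on settings such as `input_format_json_read_objects_as_strings` and `input_format_skip_unknown_fields`, so it is worth testing an insert by hand before pointing APISIX at the table.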

Tip: if you have customized log_format, create a table structure that matches it yourself.

Enabling the clickhouse-logger plugin

Editing the configuration file

First, enable the clickhouse-logger plugin in the APISIX configuration. Since I run on k8s, I edited the apisix ConfigMap directly; APISIX has to be restarted after the change.


Then restart APISIX. I simply entered the apisix pod and restarted it from there.


Enabling clickhouse-logger in APISIX admin

I have APISIX Dashboard set up, so I enabled and configured the plugin directly in the dashboard.

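For orientation, the plugin block attached to a route might look like the output of the Python sketch below. The attribute names (`endpoint_addr`, `user`, `password`, `database`, `logtable`) are the ones I understand the clickhouse-logger plugin to accept; newer APISIX releases may expect `endpoint_addrs` instead, so check the documentation for your version. The helper name and every value are placeholders, not the configuration used in this article.

```python
import json


def clickhouse_logger_conf(endpoint_addr, user, password, database, logtable):
    """Return the `plugins` fragment of a route with clickhouse-logger enabled."""
    return {
        "clickhouse-logger": {
            "endpoint_addr": endpoint_addr,  # ClickHouse HTTP endpoint
            "user": user,
            "password": password,
            "database": database,
            "logtable": logtable,            # table that receives the log rows
        }
    }


# Placeholder values; replace them with your own endpoint and credentials.
plugins = clickhouse_logger_conf(
    "http://clickhouse.example.internal:8123",
    "default", "changeme", "default", "apisix_log",
)
print(json.dumps({"plugins": plugins}, indent=2))
```

The same JSON can be pasted into the plugin editor in the dashboard or sent as part of a route definition.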

Once the plugin is enabled, you can query the data in ClickHouse.

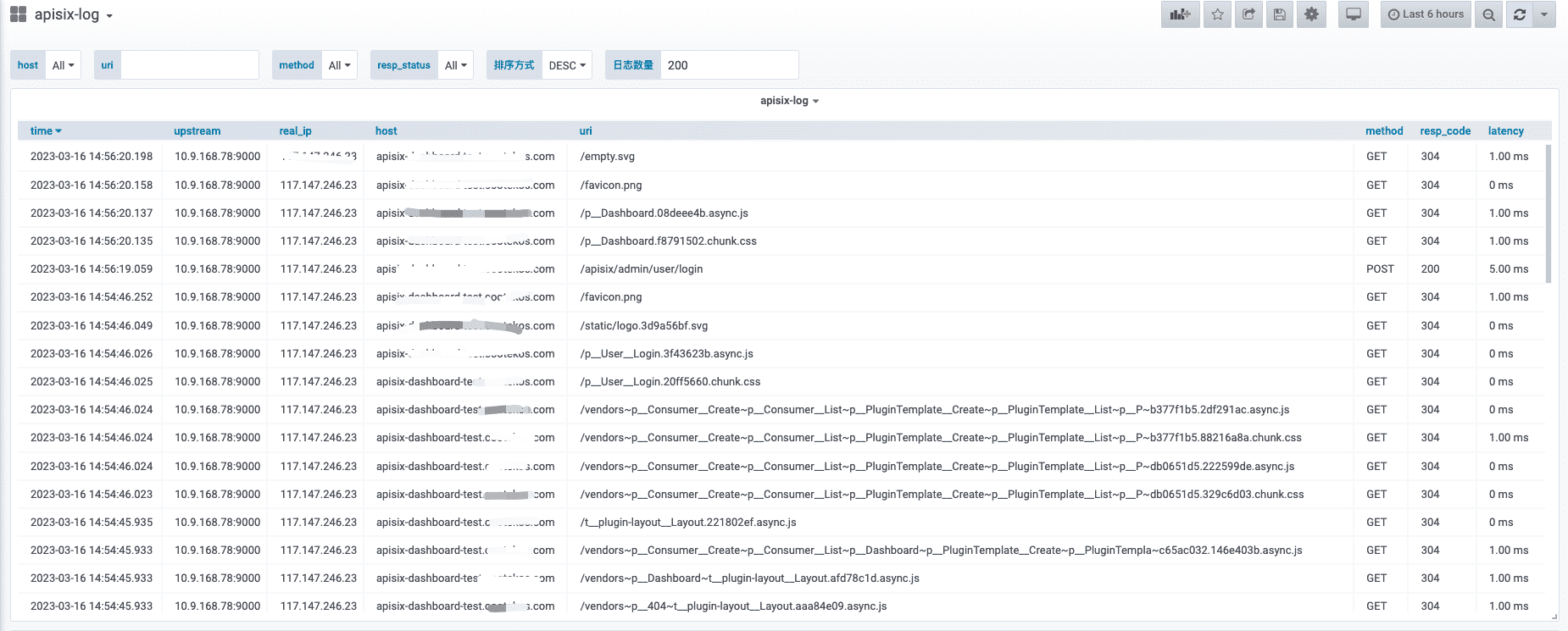

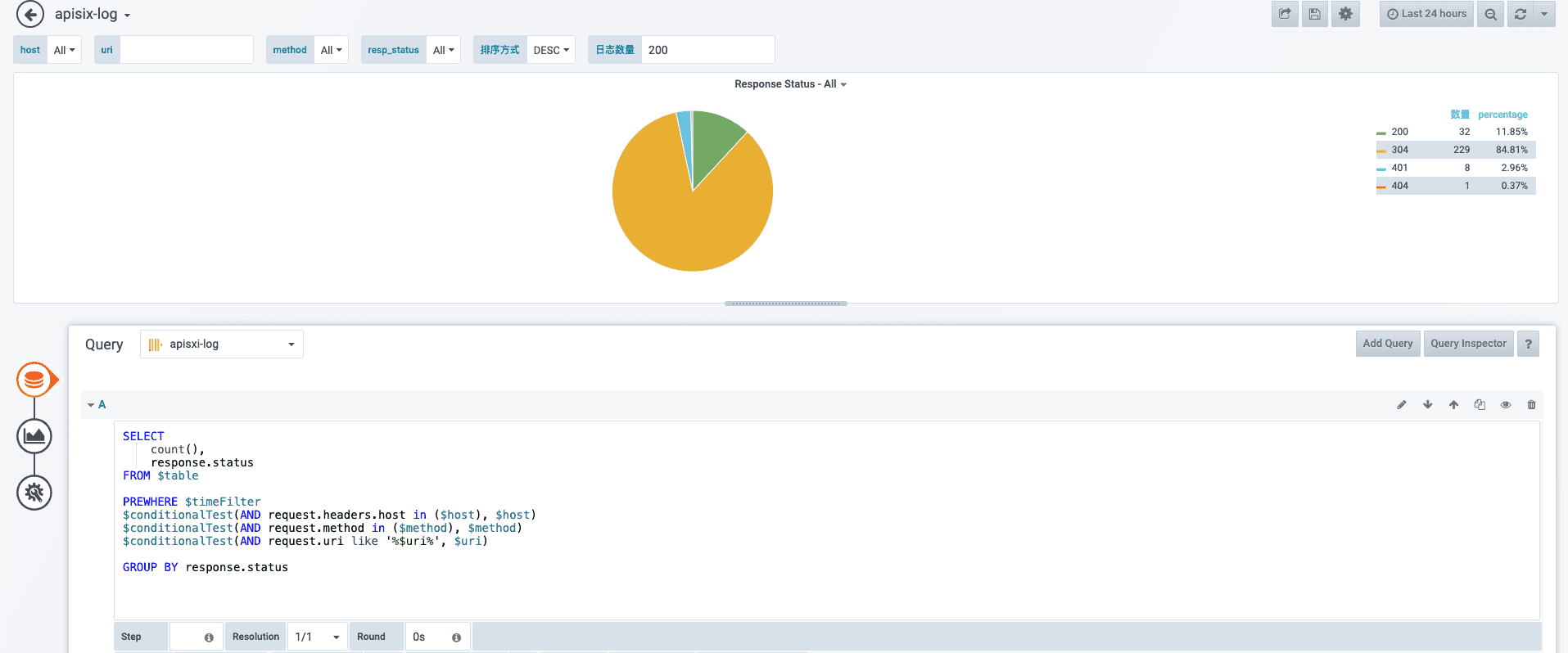

Grafana support

The screenshots above show the ClickHouse data in Grafana, driven by SQL queries against the log table.

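Queries for panels like these could look roughly like the ones collected in the Python sketch below. They are not the queries behind the screenshots. They assume a hypothetical table `default.apisix_log` in which `start_time` is a millisecond epoch, `latency` is numeric, and `request` and `response` are stored as JSON strings; adjust the expressions if your columns differ. The dashboard time-range filter is left out because its macro syntax depends on the Grafana ClickHouse data source in use.

```python
TABLE = "default.apisix_log"  # hypothetical table name

# Bucket rows per minute from a millisecond epoch column.
MINUTE = "toStartOfMinute(toDateTime(intDiv(toUInt64(start_time), 1000)))"

QUERIES = {
    # Average latency per minute.
    "avg_latency": (
        f"SELECT {MINUTE} AS t, avg(latency) AS avg_latency "
        f"FROM {TABLE} GROUP BY t ORDER BY t"
    ),
    # 99th and 50th percentile latency per minute.
    "p99_p50": (
        f"SELECT {MINUTE} AS t, quantile(0.99)(latency) AS p99, "
        f"quantile(0.5)(latency) AS p50 "
        f"FROM {TABLE} GROUP BY t ORDER BY t"
    ),
    # Request count per response status code.
    "response_codes": (
        f"SELECT JSONExtractInt(response, 'status') AS status, count() AS hits "
        f"FROM {TABLE} GROUP BY status ORDER BY hits DESC"
    ),
    # Ten hosts with the most requests.
    "top10_hosts": (
        f"SELECT JSONExtractString(request, 'headers', 'host') AS host, "
        f"count() AS hits FROM {TABLE} GROUP BY host ORDER BY hits DESC LIMIT 10"
    ),
}

for name, sql in QUERIES.items():
    print(f"-- {name}\n{sql}\n")
```

Dividing `hits` by the length of the selected time window turns the request counts into an approximate QPS figure.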

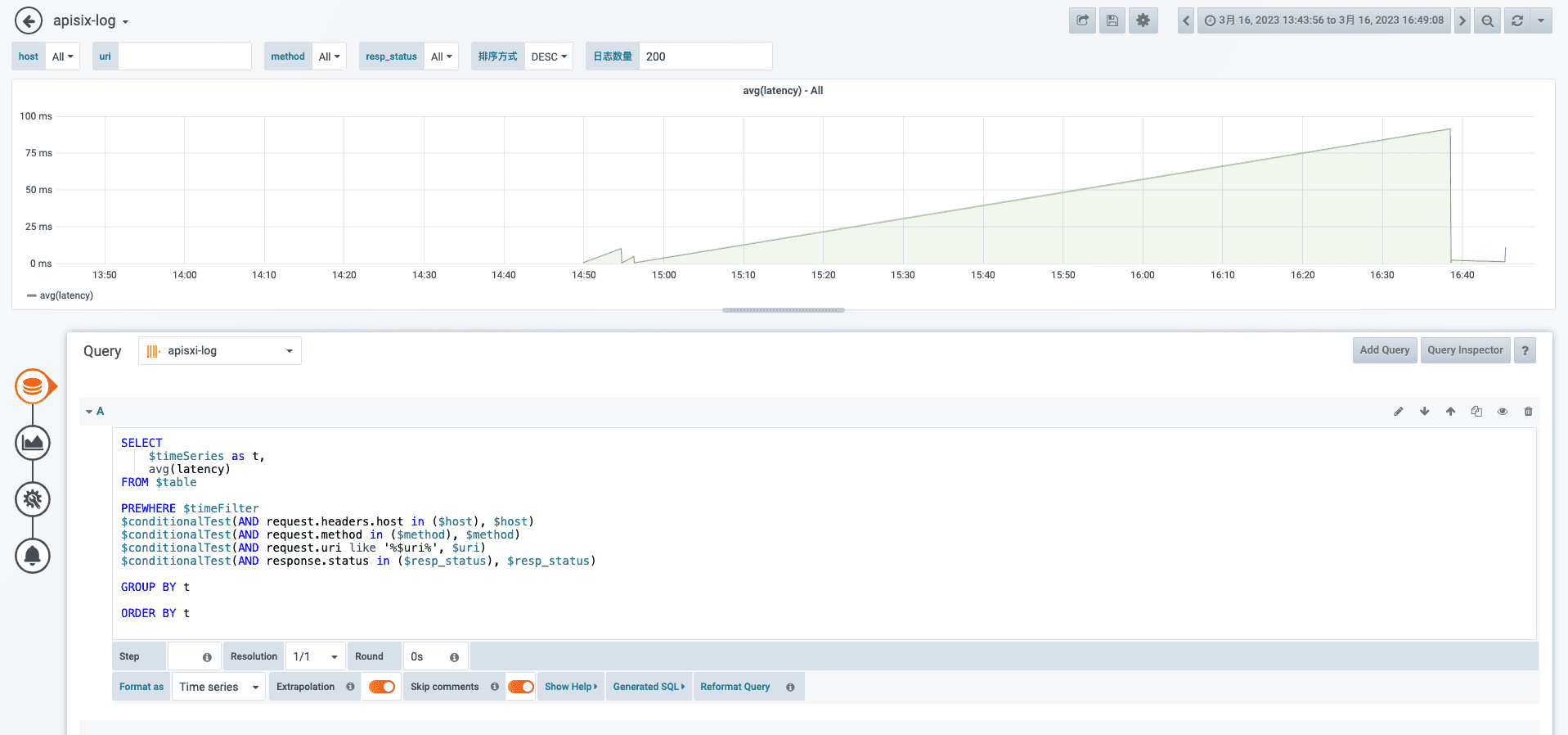

Average latency

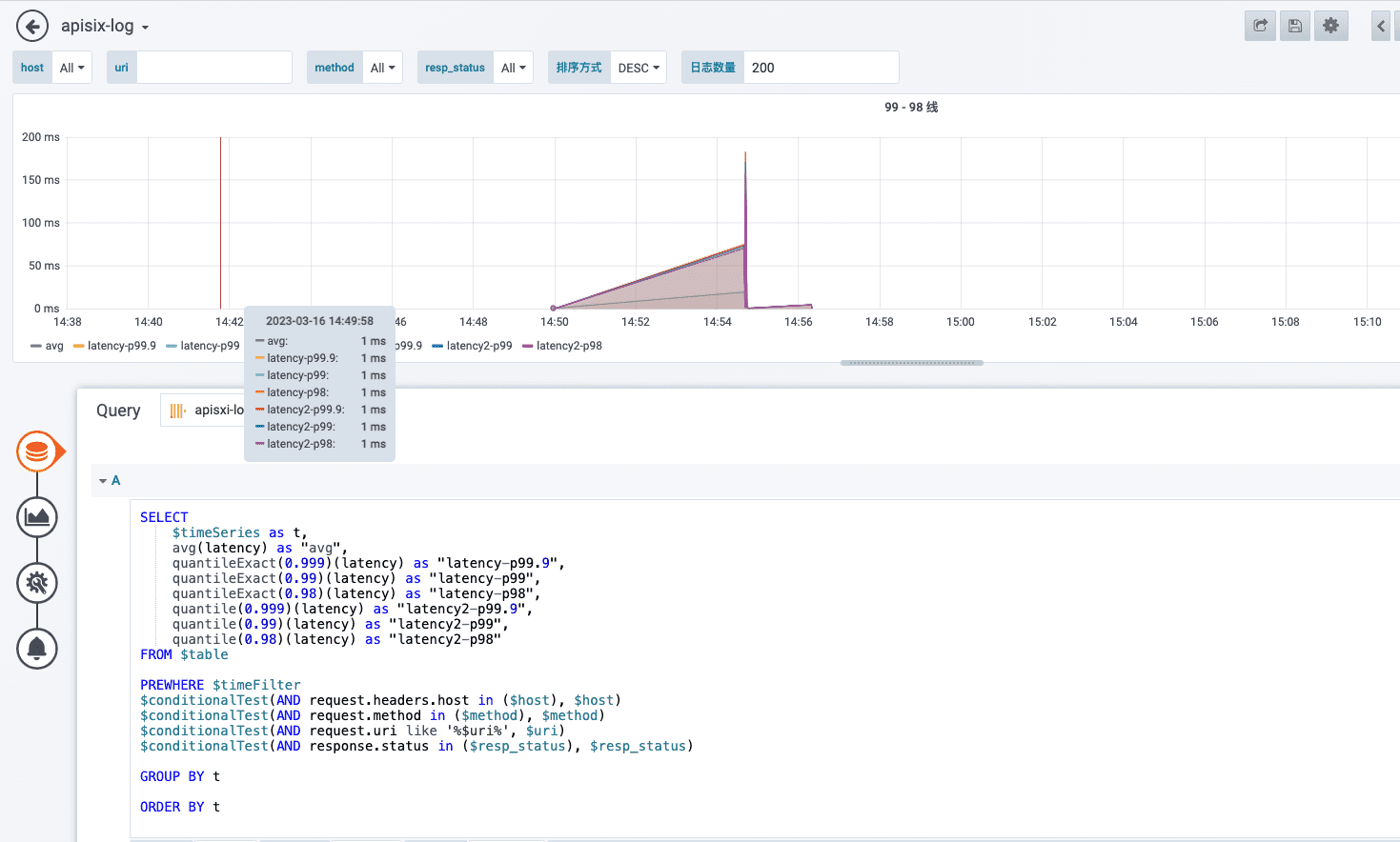

P99 and P50 latency

Response code statistics

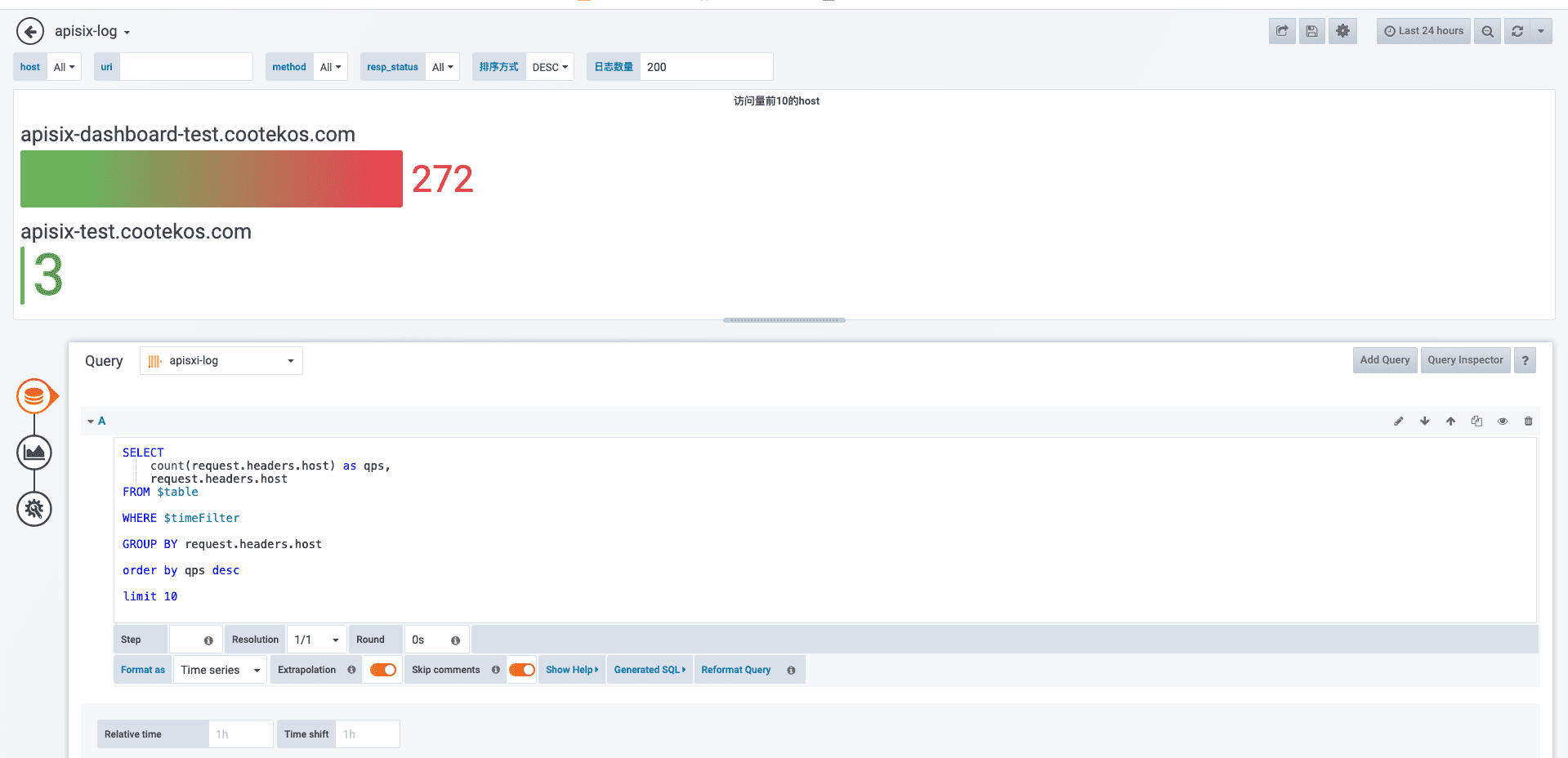

Top 10 hosts by QPS